Email Marketing – a high-ROI medium

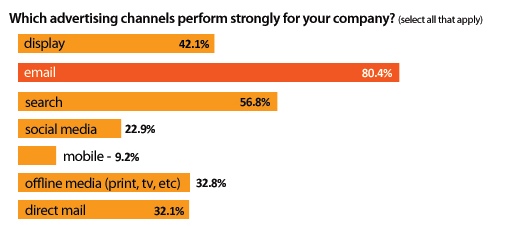

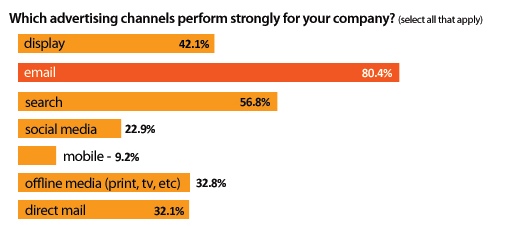

Many marketers now view email as the best-performing channel in terms of ROI. Email ROI was expected to reach about $45 for every dollar spent in 2008, more than twice the ROI of other mediums (source: marketingcharts.com). In a recent survey from Datran Media, 8 in 10 marketers cited email as one of the strongest advertising channels for their company.

(source: Datran Media, Annual Survey 2009)

The real cost of email marketing: unsubscribes and spam complaints

Unquestionably, the distribution cost of email is extremely low compared to other channels. One can email one million customers for less than $500. Reaching the same number of customers by direct mail would cost around half a million dollars (1,000 times more).

Because of the cost of mailing, one of the most critical questions direct-mail marketers try to answer is: “Is this customer worth mailing?” RFM models or more advanced response-modeling algorithms must be used to ensure an acceptable ROI on every campaign.

In the email marketing world, this question is almost meaningless. The entire customer file can be emailed again and again with a very limited budget. For most businesses, a response rate of 0.01% is sufficient to make the ROI positive.
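To make the arithmetic concrete, the sketch below computes a break-even response rate (distribution cost per message divided by the margin earned per response) for both channels. The per-message costs are derived from the figures above; the $20 margin per responding customer is a hypothetical assumption, and creative and other fixed costs are deliberately ignored.

```python
def break_even_response_rate(cost_per_message: float, margin_per_response: float) -> float:
    """Response rate at which the margin from responders just covers distribution cost.

    Simplifying assumption: creative and other fixed costs are ignored.
    """
    return cost_per_message / margin_per_response


# Per-message costs derived from the figures in the text.
email_cost = 500 / 1_000_000      # under $500 per million emails -> ~$0.0005 each
mail_cost = 500_000 / 1_000_000   # ~$500,000 per million pieces -> ~$0.50 each

# Hypothetical margin earned per responding customer.
margin = 20.0

print(f"Email break-even response rate: {break_even_response_rate(email_cost, margin):.4%}")
print(f"Mail break-even response rate:  {break_even_response_rate(mail_cost, margin):.4%}")
```

Under these assumptions, email breaks even at roughly 0.0025%, below the 0.01% mentioned above, while direct mail needs a response rate of about 2.5%.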

The real cost of an email campaign is not the distribution cost. It is the loss of potential revenue associated with email unsubscribes and spam complaints.

Finding a balance between more revenue today and diminished revenue tomorrow

The more you email a list, the more revenue you can obtain immediately. But if you email customers too frequently, you risk spamming them. This can lead them to unsubscribe and, eventually, turn away from you.

Kirill Popov and Loren McDonald from EmailLabs tell the story, on clickz.com, of a multichannel retailer that increased its email frequency from 5 to 12 messages a month. Revenue increased by 38%, but the unsubscribe rate more than doubled, from 0.74% to 1.77%. While actual figures vary from one business to another, these numbers are in line with what we have seen over our years of practice.

Getting the good (more revenue) without the bad (unsubscribes): how to determine the optimal email frequency?

It is surprising how few companies determine their optimal email frequency using facts and sound analysis. Most of the time, marketers set email frequency based on common industry practice, personal experience, and gut feeling.

Email frequency is too important to be left untested. One can even argue that there is no single optimal frequency: every customer is different and requires a different email contact strategy.

The approach we usually follow at Agilone is three-fold:

- Build a 360-degree view of customers with sales data, web data, and email data

- Segment the email list based on customer potential value and email/web activity

- Determine the optimal frequency for each segment by using robust A/B testing techniques and a few formulas

Building a 360-degree view of customers with sales data, web data, and email data

The more we know about a customer, the better we are able to target and increase the relevance of our marketing efforts.

At Agilone, we have the technology and expertise to connect to various systems such as order management systems, web analytics solutions (Coremetrics, Omniture, etc.), and email marketing solutions (Silverpop, ExactTarget, Responsys, etc.). Connecting these dots, we build a robust foundation for all subsequent analytical work.
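As a rough illustration of what connecting these sources involves, the sketch below merges three hypothetical extracts (orders, web sessions, and email events) into one record per customer. It assumes every source already shares a common customer ID; in practice, matching identities across systems is often the hardest part. All field and function names are hypothetical and do not reflect any vendor's export format.

```python
from dataclasses import dataclass


@dataclass
class CustomerView:
    """One consolidated record per customer (hypothetical fields)."""
    customer_id: str
    total_sales: float = 0.0
    order_count: int = 0
    site_visits: int = 0
    emails_sent: int = 0
    emails_opened: int = 0


def build_customer_view(orders, web_sessions, email_events):
    """Merge order, web, and email data keyed on a shared customer ID.

    orders:       iterable of (customer_id, order_amount)
    web_sessions: iterable of customer_id, one entry per site visit
    email_events: iterable of (customer_id, event) with event in {"sent", "open"}
    """
    views: dict[str, CustomerView] = {}

    def get(cid: str) -> CustomerView:
        # Create the record the first time a customer appears in any source.
        if cid not in views:
            views[cid] = CustomerView(cid)
        return views[cid]

    for cid, amount in orders:
        view = get(cid)
        view.total_sales += amount
        view.order_count += 1
    for cid in web_sessions:
        get(cid).site_visits += 1
    for cid, event in email_events:
        view = get(cid)
        if event == "sent":
            view.emails_sent += 1
        elif event == "open":
            view.emails_opened += 1
    return views


views = build_customer_view(
    orders=[("c1", 120.0), ("c1", 80.0), ("c2", 40.0)],
    web_sessions=["c1", "c3", "c3"],
    email_events=[("c1", "sent"), ("c1", "open"), ("c3", "sent")],
)
print(views["c1"])
```

A customer who appears in only one source (like c2 or c3 here) still gets a record, so prospects with web or email activity but no purchases are not lost.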

Customer segmentation: mailing more to high-potential, highly engaged customers

Different customers require different email frequencies. Agilone has defined two dimensions on which customers can be evaluated.

- Potential value: how much future revenue is expected from a customer/prospect

- Web/email level of activity: how responsive or engaged a customer is with regard to web and email activity (how often the customer checks the website, how many emails they opened, etc.)

The underlying idea is that we should email more frequently the customers who have high potential value and who open all the emails we send, check our website regularly, and even forward our emails to friends.

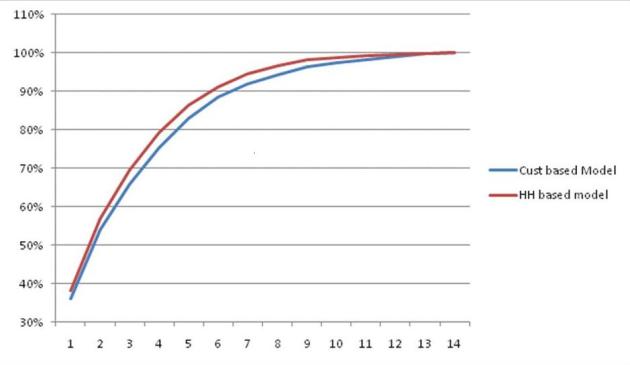

Using advanced statistical modeling, we assign a composite score to every customer and prospect. The entire email list is then segmented into 10 deciles that can be treated differently.
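The modeling behind the composite score is not described here, so the sketch below swaps in a deliberately simple stand-in: a weighted average of potential value and engagement, each scaled to [0, 1], followed by rank-based decile assignment (decile 1 = top 10%). The 50/50 weight and the input format are hypothetical; this is not Agilone's scoring model.

```python
def composite_scores(customers: dict[str, tuple[float, float]], w_value: float = 0.5) -> dict[str, float]:
    """Weighted average of scaled potential value and engagement (simple stand-in model).

    customers maps customer_id -> (potential_value, engagement); both assumed non-negative.
    """
    # Scale each dimension by its maximum so both fall in [0, 1]; guard against all-zero input.
    max_value = max(v for v, _ in customers.values()) or 1.0
    max_engagement = max(e for _, e in customers.values()) or 1.0
    return {
        cid: w_value * v / max_value + (1 - w_value) * e / max_engagement
        for cid, (v, e) in customers.items()
    }


def assign_deciles(scores: dict[str, float]) -> dict[str, int]:
    """Rank by score, highest first, and map ranks to deciles 1 (top) through 10."""
    ranked = sorted(scores, key=scores.get, reverse=True)
    n = len(ranked)
    return {cid: i * 10 // n + 1 for i, cid in enumerate(ranked)}


# Twenty hypothetical customers whose value and engagement both grow with the index.
customers = {f"c{i:02d}": (i * 50.0, float(i)) for i in range(20)}
deciles = assign_deciles(composite_scores(customers))
print(deciles["c19"], deciles["c00"])
```

Rank-based deciles keep the segments equal in size, which makes frequency tests across deciles easier to compare.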

The top deciles show the best response rates and the lowest unsubscribe rates compared to the other deciles at the same email frequency.
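One way to check this pattern on your own list is to tabulate response and unsubscribe rates per decile for a campaign sent at a single frequency. The sketch assumes hypothetical inputs: a customer-to-decile mapping, the list of customers emailed, and the sets of customers who responded or unsubscribed.

```python
from collections import Counter


def rates_by_decile(deciles, emailed, responders, unsubscribers):
    """Return {decile: (response_rate, unsubscribe_rate)} for one campaign.

    deciles:       dict customer_id -> decile (1 = top)
    emailed:       iterable of customer_ids that received the campaign
    responders:    set of customer_ids that responded
    unsubscribers: set of customer_ids that unsubscribed
    """
    sent, responded, unsubscribed = Counter(), Counter(), Counter()
    for cid in emailed:
        d = deciles[cid]
        sent[d] += 1
        responded[d] += int(cid in responders)
        unsubscribed[d] += int(cid in unsubscribers)
    return {d: (responded[d] / sent[d], unsubscribed[d] / sent[d]) for d in sorted(sent)}


deciles = {"a": 1, "b": 1, "c": 10, "d": 10}
result = rates_by_decile(deciles, ["a", "b", "c", "d"], responders={"a", "b"}, unsubscribers={"d"})
print(result)  # {1: (1.0, 0.0), 10: (0.0, 0.5)}
```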

Email Frequency: testing, testing, testing…

Different frequencies can be tested within each of the segments defined above. Let’s say that, for a particular segment, we split the list in two. One half of the segment receives 8 emails per month (list A); the other half receives 12 emails per month (list B). As one would expect, list B generates more revenue, but list A has a lower unsubscribe rate.
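The specific A/B testing technique is not named here; a common choice is a random 50/50 split followed by a two-proportion z-test on the unsubscribe rates. The sketch below implements that standard test with the Python standard library only. The list size and unsubscribe counts in the example are hypothetical.

```python
import math
import random


def split_ab(customer_ids, seed=42):
    """Randomly split a segment into two halves (list A, list B)."""
    ids = list(customer_ids)
    random.Random(seed).shuffle(ids)  # fixed seed makes the split reproducible
    half = len(ids) // 2
    return ids[:half], ids[half:]


def two_proportion_z_test(x_a, n_a, x_b, n_b):
    """Two-sided z-test for a difference between two proportions (pooled variance).

    x_* = number of events (e.g. unsubscribes), n_* = number of customers emailed.
    Returns (z, p_value).
    """
    p_a, p_b = x_a / n_a, x_b / n_b
    pooled = (x_a + x_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value


list_a, list_b = split_ab(f"c{i}" for i in range(100_000))
# Hypothetical outcome: 370 unsubscribes in A (8 emails/month), 885 in B (12 emails/month).
z, p = two_proportion_z_test(370, len(list_a), 885, len(list_b))
print(f"z = {z:.1f}, p = {p:.2g}")
```

A small p-value only says that the unsubscribe rates differ; deciding which frequency is preferable still requires weighing the extra revenue against those unsubscribes.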

Which frequency should be preferred? Let’s consider the following formula.

Additional profit from higher email frequency =

+ Increase in revenue attributed to higher email frequency

– Additional distribution costs due to higher email frequency

– Additional creative costs due to higher email frequency

– Future revenue loss because of additional unsubscribes (number of additional unsubscribes × (future revenue from an active email address – future revenue from an unsubscribed email address))
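The formula above translates directly into code. In the sketch below, every input value is hypothetical; in practice, the revenue and cost differences would come from the A/B test, and the future revenue figures would be estimates per active and per unsubscribed email address over the same time horizon.

```python
def additional_profit(
    revenue_increase: float,
    extra_distribution_cost: float,
    extra_creative_cost: float,
    extra_unsubscribes: int,
    future_revenue_active: float,
    future_revenue_unsubscribed: float,
) -> float:
    """Additional profit from moving a segment to the higher email frequency."""
    # Revenue lost in the future because additional addresses unsubscribed.
    future_revenue_loss = extra_unsubscribes * (future_revenue_active - future_revenue_unsubscribed)
    return revenue_increase - extra_distribution_cost - extra_creative_cost - future_revenue_loss


profit = additional_profit(
    revenue_increase=38_000.0,         # hypothetical: list B revenue minus list A revenue
    extra_distribution_cost=200.0,     # hypothetical
    extra_creative_cost=5_000.0,       # hypothetical
    extra_unsubscribes=1_030,          # hypothetical
    future_revenue_active=30.0,        # hypothetical future revenue per active address
    future_revenue_unsubscribed=10.0,  # hypothetical (unsubscribed customers may still buy)
)
print(profit)  # 12200.0
```

A positive result favors the higher frequency for that segment; a negative one means the additional unsubscribes cost more than the extra revenue brings in.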

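Estimating each component of this formula, segment by segment, shows whether the extra revenue from a higher frequency outweighs the revenue lost to additional unsubscribes.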

We will give more examples and details on the three stages above very soon.

I hope you enjoyed the article. Please continue the discussion by posting your comments here or by sending an email to info@agilone.com.

Read you soon!

Anselme LE VAN

Associate, Analytics | Agilone

Website: www.agilone.com

Revenue attribution is the hottest topic these days. The proliferation of online media requires reshuffling marketing spend across many more spend categories. Traditional funnel-engineering work is good, but static, and doesn’t address a few key issues.
